
Embedded Linux Medical Research Device, Part 1: Requirements, GStreamer, and Docker

This blog post is part one of a series about embedded Linux medical research devices: their requirements, existing tools, and solutions.

MAB Labs Embedded Solutions recently partnered with Linear Computing Inc. to develop a medical research product based on the Toradex Verdin iMX8M Plus System on Module (SoM). Linear Computing developed the custom carrier board and accompanying hardware, and MAB Labs implemented all the embedded software. In this blog post, we discuss the premise and requirements of the project, along with the first set of hurdles we had to overcome.

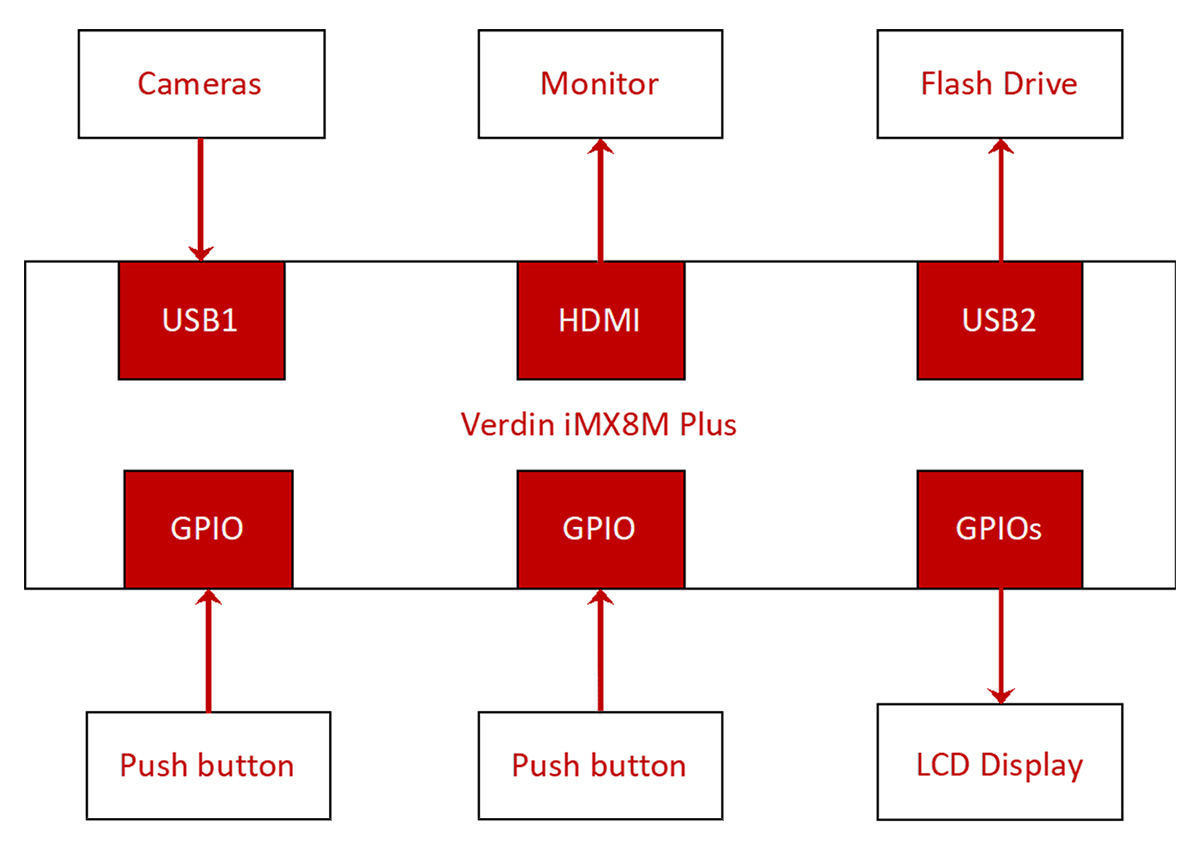

The following block diagram provides an overview of the system:

- The device has the following peripherals:
  - Four cameras are connected to one USB port on the Verdin iMX8M Plus SoM via a USB hub.
  - A USB flash drive is connected to the other USB port.
  - A two-line LCD display is connected to GPIOs on the Verdin iMX8M Plus SoM.
    - The display is controlled by driving those GPIOs.
  - A monitor is connected to the HDMI port of the SoM.
  - Two push buttons are connected to GPIOs on the Verdin iMX8M Plus SoM.

- The device should behave as follows:
  - On boot-up, the video feed from the first camera should be displayed over HDMI.
  - When the first button is pressed, 1920x1080 video at 30 fps and audio from all four cameras should be recorded simultaneously.
    - The recording from each camera should be saved as a separate file on the USB flash drive.
    - The LCD display should indicate that recording is in progress, and a similar message should be displayed over HDMI.
  - When the same button is pressed again, the recording should stop.
    - The LCD display should indicate that the recording has stopped.
    - The video feed from the first camera should again be displayed over HDMI.
  - When the second button is pressed while no recording is in progress, the HDMI output should switch to the feed from the next camera.
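To make the button behavior concrete, here is a minimal Python sketch of the requirements as a small state machine. It is a hypothetical model written for illustration, not MAB Labs' implementation: the class and method names are our own, the returned strings stand in for the real display and recording actions, and wrapping from the last camera back to the first is an assumption, since the requirements do not say what happens after the fourth camera.

```python
from dataclasses import dataclass

NUM_CAMERAS = 4  # four USB cameras, per the requirements


@dataclass
class DeviceState:
    """Hypothetical model of the button requirements; not the project's real code."""

    recording: bool = False
    preview_camera: int = 0  # index of the camera shown over HDMI (0 = first camera)

    def press_record(self) -> str:
        """First button: start recording all cameras, or stop if already recording."""
        if not self.recording:
            self.recording = True
            return "start recording all cameras; show 'recording' on LCD and HDMI"
        self.recording = False
        self.preview_camera = 0  # after stopping, show the first camera again
        return "stop recording; show 'stopped' on LCD; preview first camera on HDMI"

    def press_next(self) -> str:
        """Second button: switch the HDMI preview, but only when not recording."""
        if self.recording:
            return "ignored: recording in progress"
        # Assumption: wrap around from the last camera back to the first.
        self.preview_camera = (self.preview_camera + 1) % NUM_CAMERAS
        return f"preview camera {self.preview_camera} on HDMI"
```

For example, pressing the second button twice after boot moves the preview to the third camera (index 2), and a later record/stop cycle returns it to index 0.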

Based on the above requirements, our first choice was GStreamer (https://gstreamer.freedesktop.org). GStreamer is the de facto standard framework on Linux for playing, recording, and transforming media (for example, resizing video). In GStreamer, media is processed by a “pipeline”, which consists of different elements chained together. Our first task was to build up the GStreamer pipeline incrementally on the command line. Once we had a pipeline that could record video from all four cameras at 1920x1080 and 30 fps, we could proceed with the implementation. We prototyped these pipelines inside a Docker container running on the Verdin iMX8M Plus, using a Dockerfile based on a Toradex sample:

```dockerfile
# Base image parameters (Toradex Torizon Wayland base image with Vivante GPU support)
ARG BASE_NAME=wayland-base-vivante
ARG IMAGE_ARCH=linux/arm64/v8
ARG IMAGE_TAG=2
ARG DOCKER_REGISTRY=torizon

FROM --platform=$IMAGE_ARCH $DOCKER_REGISTRY/$BASE_NAME:$IMAGE_TAG

# ARGs declared before FROM are not visible after it, so re-declare IMAGE_ARCH
ARG IMAGE_ARCH

# GStreamer runtime, plugins, and tools, plus V4L2 and audio utilities
RUN apt-get -y update && apt-get install -y --no-install-recommends \
    libgstreamer1.0-0 \
    gstreamer1.0-plugins-base \
    gstreamer1.0-plugins-good \
    gstreamer1.0-plugins-bad \
    gstreamer1.0-plugins-ugly \
    gstreamer1.0-libav \
    gstreamer1.0-doc \
    gstreamer1.0-tools \
    gstreamer1.0-x \
    gstreamer1.0-alsa \
    gstreamer1.0-gl \
    gstreamer1.0-gtk3 \
    gstreamer1.0-pulseaudio \
    v4l-utils \
    alsa-utils \
    libsndfile1-dev \
    pulseaudio \
    gstreamer1.0-qt5 \
    && apt-get clean && apt-get autoremove -y && rm -rf /var/lib/apt/lists/*

# Start an interactive shell so pipelines can be tried by hand
CMD ["/bin/bash"]
```

In addition to the packages installed by the Dockerfile in the Toradex sample, we added the “alsa-utils”, “libsndfile1-dev”, and “pulseaudio” packages so that GStreamer can record both audio and video. Finally, we added the CMD line at the end so that the container drops us into a bash shell on startup, which lets us test different GStreamer invocations interactively.

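We then built the image on the module and started a container from it. The `--privileged` flag gives the container access to the host's devices, including the cameras, and `-v` bind-mounts the host's `/var/rootdirs/media` directory at `/media` inside the container. With a single camera plugged in, we listed the video devices:

```
torizon@verdin-imx8mp-07331436:~$ docker build -t camera-test .
torizon@verdin-imx8mp-07331436:~$ docker run --privileged -v /var/rootdirs/media:/media:z -it camera-test
torizon@25a572db9aef:/$ ls -l /dev/video*
crw-rw---- 1 root video 81, 0 Apr 17 11:39 /dev/video0
crw-rw---- 1 root video 81, 1 Apr 17 11:39 /dev/video1
```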

Why are there two video devices when only one camera is plugged in? “/dev/video0” carries the video feed from the camera, and “/dev/video1” carries the metadata associated with that feed. We are only interested in “/dev/video0” for our application.
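Because each camera contributes a metadata node as well as a video node, it can help to check programmatically which nodes actually capture video. The sketch below is our own assumption rather than part of the original workflow: it parses the output of `v4l2-ctl -d <device> --info` and looks at the `Device Caps` section, which describes the node that was opened. The `Capabilities` section, by contrast, describes the whole device and can list both `Video Capture` and `Metadata Capture` for either node. The function names are hypothetical, and the exact output layout may vary between v4l-utils versions.

```python
import subprocess


def device_caps(info_text: str) -> set:
    """Collect the capability names listed under 'Device Caps' in `v4l2-ctl --info` output."""
    caps = set()
    in_section = False
    section_indent = 0
    for line in info_text.splitlines():
        stripped = line.strip()
        indent = len(line) - len(line.lstrip())
        if stripped.startswith("Device Caps"):
            # The capability names follow on lines indented deeper than this header.
            in_section, section_indent = True, indent
            continue
        if in_section:
            if stripped and indent > section_indent:
                caps.add(stripped)
            else:
                break  # a blank or less-indented line ends the section
    return caps


def is_video_capture_node(device: str) -> bool:
    """Run v4l2-ctl on `device` and report whether the node itself captures video."""
    result = subprocess.run(
        ["v4l2-ctl", "-d", device, "--info"],
        capture_output=True, text=True, check=True,
    )
    return "Video Capture" in device_caps(result.stdout)
```

On the module, filtering `sorted(glob.glob("/dev/video*"))` through `is_video_capture_node` would be expected to keep only the video nodes, such as “/dev/video0”.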

```
torizon@2f94978f1489:/$ v4l2-ctl -d /dev/video0 --list-formats
ioctl: VIDIOC_ENUM_FMT
    Type: Video Capture

    [0]: 'MJPG' (Motion-JPEG, compressed)
    [1]: 'YUYV' (YUYV 4:2:2)
```

We select MJPG in our GStreamer pipeline because it is compressed and therefore requires much less USB bandwidth than YUYV, which is uncompressed.
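A quick back-of-the-envelope calculation shows why the uncompressed format is impractical here. YUYV 4:2:2 stores 2 bytes per pixel, so 1920x1080 at 30 fps needs 1920 × 1080 × 2 × 30 × 8 ≈ 995 Mbit/s for a single camera, roughly twice the 480 Mbit/s signaling rate of USB 2.0 High-Speed, and usable throughput is lower still. The sketch assumes the cameras are USB 2.0 High-Speed devices; the MJPG bit rate depends on scene content and compression, so it is not computed here.

```python
def uncompressed_mbit_per_s(width: int, height: int, fps: int, bytes_per_pixel: int) -> float:
    """Bit rate of an uncompressed video stream in Mbit/s."""
    return width * height * bytes_per_pixel * fps * 8 / 1_000_000


YUYV_BYTES_PER_PIXEL = 2   # YUYV 4:2:2 packs two pixels into four bytes
USB2_HS_MBIT_PER_S = 480   # USB 2.0 High-Speed signaling rate (raw, before overhead)

yuyv_rate = uncompressed_mbit_per_s(1920, 1080, 30, YUYV_BYTES_PER_PIXEL)
print(f"YUYV 1920x1080@30: {yuyv_rate:.1f} Mbit/s (USB 2.0 HS: {USB2_HS_MBIT_PER_S} Mbit/s)")
```

Running it prints `YUYV 1920x1080@30: 995.3 Mbit/s (USB 2.0 HS: 480 Mbit/s)`.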

```
torizon@2f94978f1489:/$ v4l2-ctl -d /dev/video0 --list-formats-ext
ioctl: VIDIOC_ENUM_FMT
    Type: Video Capture

    [0]: 'MJPG' (Motion-JPEG, compressed)
        Size: Discrete 3840x2160
            Interval: Discrete 0.033s (30.000 fps)
            Interval: Discrete 0.040s (25.000 fps)
            Interval: Discrete 0.050s (20.000 fps)
            Interval: Discrete 0.067s (15.000 fps)
            Interval: Discrete 0.100s (10.000 fps)
            Interval: Discrete 0.200s (5.000 fps)
        ...
        Size: Discrete 1920x1080
            Interval: Discrete 0.033s (30.000 fps)
            Interval: Discrete 0.040s (25.000 fps)
            Interval: Discrete 0.050s (20.000 fps)
            Interval: Discrete 0.067s (15.000 fps)
            Interval: Discrete 0.100s (10.000 fps)
            Interval: Discrete 0.200s (5.000 fps)
```

The listing shows that the camera supports MJPG at 1920x1080 and 30 fps, which is what our GStreamer application needs.
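Rather than reading the listing by eye, the check can be scripted. The following Python sketch, a hypothetical helper not used in the original project, parses `v4l2-ctl --list-formats-ext` output and returns the frame rates advertised for a given pixel format and frame size. It relies on the line layout of the listing above and may need adjusting for other v4l-utils versions.

```python
import re

FORMAT_RE = re.compile(r"\[\d+\]: '([^']{4})'")           # e.g. [0]: 'MJPG' (...)
SIZE_RE = re.compile(r"Size: Discrete (\d+)x(\d+)")          # e.g. Size: Discrete 1920x1080
RATE_RE = re.compile(r"Interval: Discrete [\d.]+s \(([\d.]+) fps\)")


def supported_rates(listing: str, fourcc: str, width: int, height: int) -> list:
    """Frame rates (fps) advertised for one pixel format and frame size."""
    rates = []
    current_fmt = None
    current_size = None
    for raw in listing.splitlines():
        line = raw.strip()
        if m := FORMAT_RE.match(line):
            current_fmt, current_size = m.group(1), None  # start of a new pixel format
        elif m := SIZE_RE.match(line):
            current_size = (int(m.group(1)), int(m.group(2)))  # start of a new frame size
        elif m := RATE_RE.match(line):
            if current_fmt == fourcc and current_size == (width, height):
                rates.append(float(m.group(1)))
    return rates
```

On the module, one could pass it the `stdout` of `subprocess.run(["v4l2-ctl", "-d", "/dev/video0", "--list-formats-ext"], capture_output=True, text=True)` and check that `30.0` is in the result for `"MJPG"` at 1920x1080.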

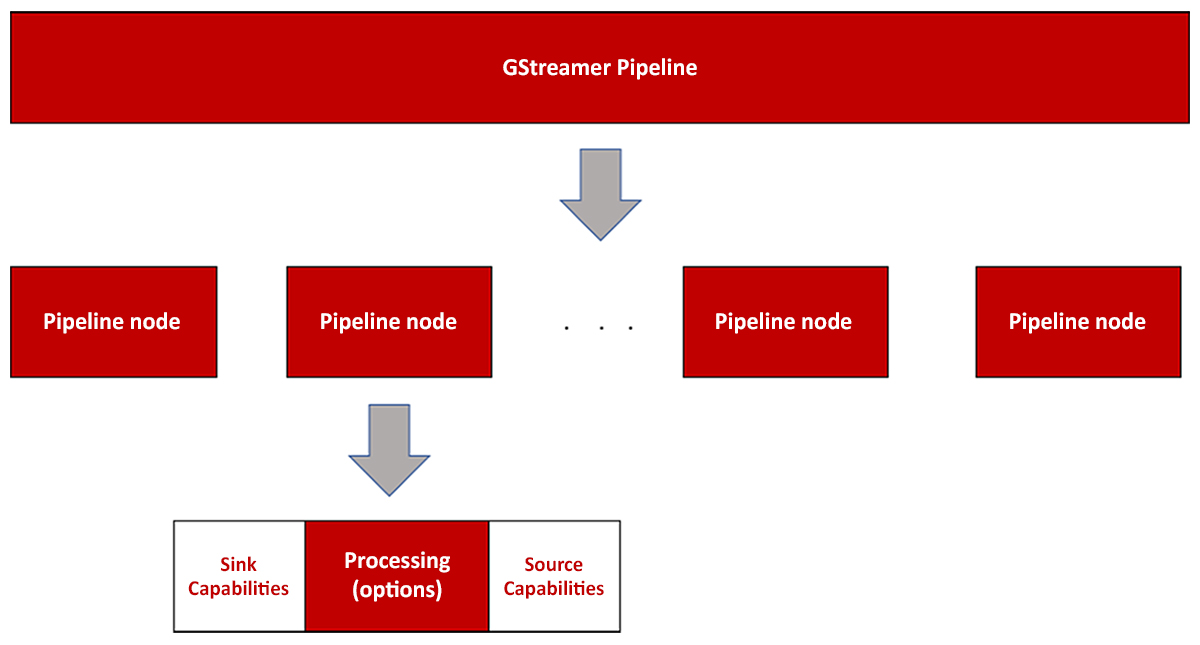

We can assemble our GStreamer pipeline with the information collected above. While a GStreamer pipeline may look foreign during a first encounter, it becomes easier to understand if we keep the following image in mind:

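A GStreamer pipeline consists of multiple nodes (GStreamer calls them elements) chained together using the exclamation point character “!”. Several independent chains can be described in a single invocation of gst-launch-1.0 by separating them with a space; they then run side by side in the same pipeline. Each node performs some processing and can be configured with properties written as name=value pairs after the node's name and separated by spaces (for example, device=/dev/video0). Caps filters, such as image/jpeg,width=1920, instead list their fields separated by commas.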

Most GStreamer novices have trouble assembling a pipeline, and they are sometimes presented with arcane error messages that are difficult to troubleshoot. It helps to remember that nodes have specific “sink” capabilities, the types of data they can ingest, and “source” capabilities, the types of data they generate. When connecting nodes in a GStreamer pipeline, we must ensure that each node's source capabilities are compatible with the next node's sink capabilities; equivalently, each node's sink capabilities must be compatible with the previous node's source capabilities.
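The linking rule can be illustrated with a toy model. The Python sketch below is deliberately simplified and is not the GStreamer API: each node lists the caps it can consume (sink) and produce (source), a caps entry is a media type plus a few fixed fields, and a link is accepted when some source caps of the upstream node are compatible with some sink caps of the downstream node. GStreamer itself performs a much richer version of this check through caps intersection (for example, `gst_caps_can_intersect`). The caps assigned to `v4l2src`, `avimux`, `filesink`, and `videoconvert` below are trimmed-down assumptions, not their full pad templates.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Caps:
    """Toy caps: a media type plus fixed fields. Not GStreamer's GstCaps."""

    media_type: str                      # "ANY" is compatible with everything
    fields: dict = field(default_factory=dict)

    def compatible(self, other: "Caps") -> bool:
        if "ANY" in (self.media_type, other.media_type):
            return True
        if self.media_type != other.media_type:
            return False
        # Fields present on both sides must agree; a missing field means "any value".
        return all(other.fields[k] == v for k, v in self.fields.items() if k in other.fields)


@dataclass
class Node:
    name: str
    sink: List[Caps]  # what the node can ingest (empty for sources)
    src: List[Caps]   # what the node can produce (empty for sinks)


def first_bad_link(chain: List[Node]) -> Optional[Tuple[str, str]]:
    """Return (upstream, downstream) names for the first link whose caps cannot match."""
    for up, down in zip(chain, chain[1:]):
        # Upstream source caps must be compatible with downstream sink caps.
        if not any(s.compatible(k) for s in up.src for k in down.sink):
            return (up.name, down.name)
    return None


# Simplified, assumed caps for the nodes discussed in this post
JPEG_1080P30 = Caps("image/jpeg", {"width": 1920, "height": 1080, "framerate": "30/1"})
v4l2src = Node("v4l2src", sink=[], src=[Caps("image/jpeg"), Caps("video/x-raw")])
jpeg_filter = Node("capsfilter", sink=[JPEG_1080P30], src=[JPEG_1080P30])
avimux = Node("avimux", sink=[Caps("image/jpeg"), Caps("video/x-raw")], src=[Caps("video/x-msvideo")])
filesink = Node("filesink", sink=[Caps("ANY")], src=[])
videoconvert = Node("videoconvert", sink=[Caps("video/x-raw")], src=[Caps("video/x-raw")])
```

In this model, the v4l2src, caps filter, avimux, filesink chain passes, while routing the MJPG caps filter straight into `videoconvert`, which accepts only raw video, fails at that link. In a real pipeline, a JPEG decoder such as `jpegdec` would normally sit between the two.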

```
torizon@29e6ae2dc62d:~$ gst-launch-1.0 v4l2src device=/dev/video0 ! image/jpeg,width=1920,height=1080,framerate=30/1 ! avimux ! filesink location=./test0.avi
```

- gst-launch-1.0: The command-line tool we use to build and run our GStreamer pipeline.

- v4l2src: This node captures from a V4L2 device (here, our webcam).

- device=/dev/video0: This property tells v4l2src to capture from “/dev/video0”.

- image/jpeg,width=1920,height=1080,framerate=30/1: This caps filter requests an MJPG stream at 1920x1080 and 30 fps. Because it constrains caps negotiation, v4l2src configures the webcam to produce exactly that format.

- avimux: This node encapsulates the video feed in an AVI container.

- filesink: This node writes the encapsulated video feed to the file given by location.

We stopped the pipeline with Ctrl+C after a few seconds:

```
Setting pipeline to PAUSED ...
Pipeline is live and does not need PREROLL ...
Pipeline is PREROLLED ...
Setting pipeline to PLAYING ...
New clock: GstSystemClock
^Chandling interrupt.
Interrupt: Stopping pipeline ...
Execution ended after 0:00:03.759970136
Setting pipeline to NULL ...
Freeing pipeline ...
torizon@29e6ae2dc62d:~$
```

We can transfer the file to a desktop PC and play it using an application such as VLC.
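When the recording is started and stopped from a program instead of by hand, the same pipeline can be launched as a subprocess. The sketch below is our suggestion, not the command used above: it adds the `-e` (`--eos-on-shutdown`) option of `gst-launch-1.0`, which makes an interrupt send an end-of-stream event through the pipeline so that muxers such as `avimux` can finalize the file before the pipeline shuts down. The function names and the fixed recording duration are hypothetical.

```python
import shlex
import signal
import subprocess
import time
from typing import List


def gst_launch_argv(description: str) -> List[str]:
    """Split a pipeline description into gst-launch-1.0 arguments.

    -e (--eos-on-shutdown) makes gst-launch-1.0 send EOS on interrupt so that
    muxers such as avimux can finish writing the file.
    """
    return ["gst-launch-1.0", "-e", *shlex.split(description)]


def record_for(description: str, seconds: float) -> int:
    """Run the pipeline for `seconds`, then stop it the way Ctrl+C would."""
    proc = subprocess.Popen(gst_launch_argv(description))
    try:
        time.sleep(seconds)
    finally:
        proc.send_signal(signal.SIGINT)  # same signal the terminal sends on Ctrl+C
        proc.wait()                      # wait for EOS handling and shutdown
    return proc.returncode
```

For example, `record_for("v4l2src device=/dev/video0 ! image/jpeg,width=1920,height=1080,framerate=30/1 ! avimux ! filesink location=./test0.avi", 10)` would record roughly ten seconds from the first camera. Passing the arguments as a list also avoids any shell interpretation of the “!” characters.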

```
torizon@29e6ae2dc62d:~$ gst-launch-1.0 v4l2src device=/dev/video0 ! image/jpeg,width=1920,height=1080,framerate=30/1 ! avimux ! filesink location=./test0.avi v4l2src device=/dev/video2 ! image/jpeg,width=1920,height=1080,framerate=30/1 ! avimux ! filesink location=./test1.avi
```

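Each chain has the same format as before; only the device and the output file differ (“/dev/video2” and “./test1.avi” for the second camera). Since “/dev/video1” is the first camera's metadata node, the second camera's video feed appears as “/dev/video2”. We appended the chain for the second camera to the chain for the first camera, separated by a space.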
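Since every chain differs only in its device and output file, the combined description can be generated. The following Python sketch (hypothetical helper names) reproduces the two-camera command above and extends naturally to four cameras. The four-camera device list is an assumption: if each camera exposes a video node followed by a metadata node, as the first camera does, the video nodes would be “/dev/video0”, “/dev/video2”, “/dev/video4”, and “/dev/video6”, but the actual numbering should be confirmed on the target, for instance with `v4l2-ctl --list-devices`.

```python
from typing import List


def camera_chain(device: str, location: str,
                 width: int = 1920, height: int = 1080, fps: int = 30) -> str:
    """One capture chain: camera -> MJPG caps filter -> AVI muxer -> file."""
    caps = f"image/jpeg,width={width},height={height},framerate={fps}/1"
    return f"v4l2src device={device} ! {caps} ! avimux ! filesink location={location}"


def multi_camera_pipeline(devices: List[str], prefix: str = "./test") -> str:
    """Join one chain per camera with spaces; files are ./test0.avi, ./test1.avi, ..."""
    return " ".join(
        camera_chain(dev, f"{prefix}{i}.avi") for i, dev in enumerate(devices)
    )


# Assumed video nodes for four cameras (confirm on the target with v4l2-ctl --list-devices)
FOUR_CAMERAS = ["/dev/video0", "/dev/video2", "/dev/video4", "/dev/video6"]
```

`multi_camera_pipeline(FOUR_CAMERAS)` yields a single description with four independent chains, which can be passed to `gst-launch-1.0` just like the two-camera command.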

This time, the pipeline fails almost immediately. The error originates in v4l2src1, the source node for the second camera:

```
Setting pipeline to PAUSED ...
Pipeline is live and does not need PREROLL ...
Pipeline is PREROLLED ...
Setting pipeline to PLAYING ...
New clock: GstSystemClock
ERROR: from element /GstPipeline:pipeline0/GstV4l2Src:v4l2src1: Failed to allocate required memory.
Additional debug info:
../sys/v4l2/gstv4l2src.c(659): gst_v4l2src_decide_allocation (): /GstPipeline:pipeline0/GstV4l2Src:v4l2src1:
Buffer pool activation failed
ERROR: from element /GstPipeline:pipeline0/GstV4l2Src:v4l2src1: Internal data stream error.
Additional debug info:
../libs/gst/base/gstbasesrc.c(3127): gst_base_src_loop (): /GstPipeline:pipeline0/GstV4l2Src:v4l2src1:
streaming stopped, reason not-negotiated (-4)
Execution ended after 0:00:00.139152205
Setting pipeline to NULL ...
Freeing pipeline ...
```

The kernel log points to insufficient USB bandwidth:

```
torizon@29e6ae2dc62d:~$ dmesg
...
[10768.346378] usb 1-1.3: Not enough bandwidth for new device state.
[10768.346391] usb 1-1.3: Not enough bandwidth for altsetting 11
```
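Some rough arithmetic may help explain the message. USB 2.0 High-Speed divides each second into 8000 microframes of 125 µs, and the USB 2.0 specification allows at most 80% of each microframe to be reserved for periodic (isochronous and interrupt) transfers such as webcam video. At 480 Mbit/s, a microframe carries 7500 bytes, leaving a periodic budget of 6000 bytes that all four cameras behind the hub must share. The sketch below assumes, without our having verified it for these cameras, that alternate setting 11 reserves the high-bandwidth maximum of 3 × 1024 bytes per microframe; under that assumption only one camera fits. It also ignores protocol overhead, which makes the real budget smaller.

```python
# USB 2.0 High-Speed figures from the specification
HS_BITS_PER_SECOND = 480_000_000
MICROFRAMES_PER_SECOND = 8000   # 125 us per microframe
PERIODIC_PERCENT = 80           # max share of a microframe for periodic transfers

# 480 Mbit/s / 8 bits / 8000 microframes = 7500 bytes per microframe
BYTES_PER_MICROFRAME = HS_BITS_PER_SECOND // 8 // MICROFRAMES_PER_SECOND
# 80% of 7500 = 6000 bytes per microframe for all periodic endpoints combined
PERIODIC_BUDGET = BYTES_PER_MICROFRAME * PERIODIC_PERCENT // 100

# Assumption: the camera's alternate setting asks for the high-bandwidth maximum
# of 3 transactions x 1024 bytes per microframe (not verified for these cameras).
ASSUMED_BYTES_PER_CAMERA = 3 * 1024


def cameras_that_fit(bytes_per_camera: int, budget: int = PERIODIC_BUDGET) -> int:
    """Number of equal isochronous reservations that fit in the periodic budget.

    Protocol overhead is ignored, so this is an upper bound.
    """
    return budget // bytes_per_camera


if __name__ == "__main__":
    print(BYTES_PER_MICROFRAME, PERIODIC_BUDGET, cameras_that_fit(ASSUMED_BYTES_PER_CAMERA))
```

Run as a script, it prints `7500 6000 1`.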

We will describe how we overcame this error in a future blog post.

In this blog post, we prototyped GStreamer pipelines in a Docker container on a Toradex Verdin iMX8M Plus SoM. We built and ran a Docker container with GStreamer and the other tools we needed. We used v4l2-ctl to identify the device node representing our camera and to list the formats, resolutions, and frame rates the camera supports. We then built a GStreamer pipeline that records from a single camera. Finally, we combined the pipelines for two cameras, and the combined pipeline failed due to insufficient USB bandwidth.

Stay tuned for more posts on this topic.

Mohammed Billoo, Embedded Software Consultant, MAB Labs
